KIWI: An environment for capturing the Perceptual Cues of an application for an Assisting Conversational Agent


1. Role of an Assisting Conversational Agent

LEA (LIMSI Embodied Agent) in action

An Embodied Conversational Agent (ECA) can serve as the single focal point for assistance (embodiment of assistance) when ordinary users ask questions in natural language (natural interaction). In short, the agent acts as a mediator between the user and the application's internal help system. Embodied agents have indeed been used in assistance, tutoring and learning applications, and several studies have confirmed the persona effect reported by Lester et al., showing better performance and greater agreeability when virtual characters are used.

2. The need for perceptual models

An assisting agent that tries to understand the user's request by exploiting only the information accessible from the application's internal structure (i.e. the HTML code) cannot find a group of components the user refers to when that group has no actual counterpart in the implementation. We therefore need to introduce another representation, the model, between the assisting agent and the application; this model must account for the users' perceptual viewpoints, especially those of novice users.

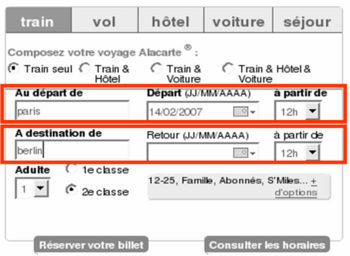

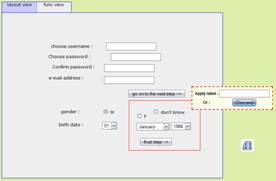

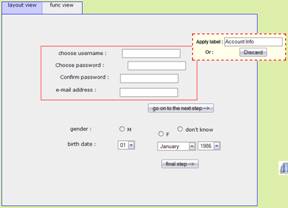

This leads to a crucial point: a key capability for the agent is linking the references perceived by the user to their formal counterparts, which is a difficult process. The figures in this section illustrate what we call the cognitive drift.


Example of a cognitive drift: users tend to perceive a horizontal organization of components in the layout (left), whereas the developers split the lines into two different HTML tags (right).

The key issue is therefore how to acquire semantic knowledge about the structure and functioning of consumer applications. Moreover, we want a framework that is as generic as possible, i.e. one that can handle new applications with little adaptation effort.

3. KIWI: An experimental framework online

The Kiwi framework was developed to support our methodology for building assisting models from applications and to study its feasibility. The general architecture is decomposed into three main processing modules: the client, the server and the modeler (or modeling agent).

- The client, developed in JavaScript, supports the interactive web-based design interface. This interface is divided into several frames, each associated with one of the design viewpoints described below. The design interface has two purposes: first, to display the information coming from the assisting environment; second, to record the designer's actions in order to build the model.

- The server, implemented as a JSP servlet running on Tomcat, simply relays information between the client and the modeler.

- The modeler, developed with the Wolfram Research symbolic environment Mathematica 5.2, is the core of the Kiwi architecture. The modeler handles a component ontology in order to display the information that is relevant at a given time (e.g. which operations are associated with a given component). Its main role, however, is to build an assisting model of the application from the information provided, explicitly or implicitly, by the client.
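To make the division of labour concrete, here is a minimal single-process sketch in Python. It is not the KIWI code (which uses a JavaScript client, a JSP/Tomcat server and a Mathematica modeler); the message format, the `Message`/`Modeler` classes, the `relay` function and the ontology contents are all hypothetical. It only shows the roles described above: the server forwards messages unchanged, and the modeler both records designer actions and answers queries from its component ontology.

```python
from dataclasses import dataclass, field

# Hypothetical component ontology: widget type -> operations it supports.
ONTOLOGY = {
    "button": ["click"],
    "textfield": ["type", "clear"],
    "list": ["select", "scroll"],
}


@dataclass
class Message:
    """Hypothetical client message; the server relays it unchanged."""
    kind: str                  # "action" (designer did something) or "query"
    component: str             # identifier of the component concerned
    payload: dict = field(default_factory=dict)


@dataclass
class Modeler:
    components: dict = field(default_factory=dict)  # component id -> widget type
    history: list = field(default_factory=list)     # recorded designer actions

    def handle(self, msg: Message):
        if msg.kind == "action":
            # Every designer action is kept as raw material for the model.
            self.history.append((msg.component, dict(msg.payload)))
            if "widget" in msg.payload:
                self.components[msg.component] = msg.payload["widget"]
            return "ok"
        if msg.kind == "query":
            # Information relevant at a given time, e.g. a component's operations.
            return list(ONTOLOGY.get(self.components.get(msg.component), []))
        raise ValueError(f"unknown message kind: {msg.kind!r}")


def relay(modeler: Modeler, msg: Message):
    """Stand-in for the server: it only forwards messages to the modeler."""
    return modeler.handle(msg)


# Usage: the designer places a button, then the client asks what it can do.
modeler = Modeler()
relay(modeler, Message("action", "search_btn", {"widget": "button"}))
print(relay(modeler, Message("query", "search_btn")))  # ['click']
```

Keeping the server as a pure relay mirrors the description above: all interpretation happens in the modeler, so the transport layer could be swapped without touching the model-building logic.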

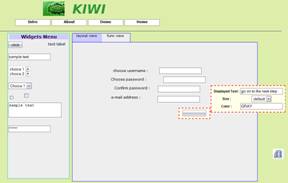

The client GUI displays two separate design viewpoints on the application being designed: the graphical view and the functional view.

The graphical view is associated with a menu listing the complete set of available widgets. In the model, these widgets act as entry points to the application. The designer can choose a widget and drag and drop it onto the main display area, which represents the main window of the future application. For the time being, we restrict ourselves to single-window applications.

Switching to the functional view blurs the display of the components. When the designer moves the mouse over a component, the list of available actions associated with that component is displayed. The designer then only needs to drag the chosen action onto the target component.
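The two views can be read as two kinds of records in the design model: widget placements (graphical view) and action links from a source component to a target component (functional view). The following Python sketch assumes exactly that; the `DesignModel` class, its method names and the widget/action catalogue are hypothetical illustrations, not KIWI's actual data structures.

```python
from dataclasses import dataclass, field


@dataclass
class DesignModel:
    """Hypothetical record of a single-window design session."""
    # Graphical view: component id -> (widget type, x, y)
    widgets: dict = field(default_factory=dict)
    # Functional view: (source component, action, target component) links
    links: list = field(default_factory=list)
    # Hypothetical catalogue: widget type -> actions offered on hover
    catalogue: dict = field(default_factory=lambda: {
        "button": ["submit", "reset"],
        "textfield": ["fill"],
        "list": ["select"],
    })

    def place(self, cid, widget, x, y):
        """Graphical view: drag a widget from the menu onto the main window."""
        if widget not in self.catalogue:
            raise ValueError(f"unknown widget {widget!r}")
        self.widgets[cid] = (widget, x, y)

    def hover(self, cid):
        """Functional view: actions listed when the mouse is over a component."""
        widget, _, _ = self.widgets[cid]
        return list(self.catalogue[widget])

    def drop_action(self, source, action, target):
        """Functional view: move the chosen action onto its target component."""
        if action not in self.hover(source):
            raise ValueError(f"{action!r} is not available on {source!r}")
        if target not in self.widgets:
            raise KeyError(target)
        self.links.append((source, action, target))


# Usage: place three widgets, then link the button's "submit" to the list.
m = DesignModel()
m.place("from", "textfield", 10, 10)
m.place("results", "list", 10, 80)
m.place("go", "button", 200, 10)
print(m.hover("go"))            # ['submit', 'reset']
m.drop_action("go", "submit", "results")
print(m.links)                  # [('go', 'submit', 'results')]
```

Validating the dropped action against the hover list keeps the functional links consistent with what the designer was actually offered on screen.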

The KIWI environment records the designer's actions as elements used to build a model that reflects what the designer is doing. These elements are also used as input to an aggregation algorithm based on Gestalt theory, which computes the sets of components that are likely to be perceived as a coherent group. The computed groups are then displayed on screen as feedback for the designer, who can in turn perform two operations:

- Discard the entity as irrelevant.

- Attach to the group a natural-language annotation that describes its semantics.
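The aggregation algorithm is only characterized here as being based on Gestalt theory. As an illustration, and only as an assumption, the Python sketch below applies one Gestalt principle, the law of proximity: two components whose bounding boxes are closer than a threshold are linked, and the candidate groups are the connected components of these links (computed with a small union-find). The `Box` type, the 12-pixel threshold, the example layout and the `review` function modeling the designer's two operations (discard, annotate) are hypothetical, not KIWI's implementation. Because grouping uses only geometry, labels and fields laid out on one row end up in a single group even if the developer split them across HTML tags, which is the situation described as cognitive drift.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Box:
    name: str
    x: float
    y: float
    w: float
    h: float


def gap(a, b):
    """Distance between two axis-aligned boxes (0 if they overlap or touch)."""
    dx = max(0.0, max(a.x, b.x) - min(a.x + a.w, b.x + b.w))
    dy = max(0.0, max(a.y, b.y) - min(a.y + a.h, b.y + b.h))
    return (dx * dx + dy * dy) ** 0.5


def proximity_groups(boxes, threshold):
    """Law of proximity: link boxes closer than `threshold`, return linked sets."""
    parent = list(range(len(boxes)))

    def find(i):
        while parent[i] != i:
            parent[i] = parent[parent[i]]  # path halving
            i = parent[i]
        return i

    for i in range(len(boxes)):
        for j in range(i + 1, len(boxes)):
            if gap(boxes[i], boxes[j]) <= threshold:
                parent[find(i)] = find(j)

    groups = {}
    for i, b in enumerate(boxes):
        groups.setdefault(find(i), []).append(b.name)
    # Single components are not interesting aggregates.
    return [sorted(g) for g in groups.values() if len(g) > 1]


def review(groups, discarded, annotations):
    """Designer feedback: drop discarded groups, attach annotations to the rest."""
    model = {}
    for g in groups:
        key = tuple(g)
        if key not in discarded:
            model[key] = annotations.get(key)  # None if not yet annotated
    return model


boxes = [
    Box("from_label", 0, 0, 40, 20), Box("from_field", 45, 0, 100, 20),
    Box("to_label", 155, 0, 40, 20), Box("to_field", 200, 0, 100, 20),
    Box("search", 0, 200, 80, 30), Box("help", 90, 200, 40, 30),
]
groups = proximity_groups(boxes, threshold=12)
model = review(
    groups,
    discarded={("help", "search")},
    annotations={("from_field", "from_label", "to_field", "to_label"):
                 "journey origin and destination"},
)
print(model)
```

A full perceptual algorithm would likely combine several Gestalt laws (similarity, alignment, closure); proximity alone is used here to keep the sketch short.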

Discard irrelevant aggregate

Annotation in natural language

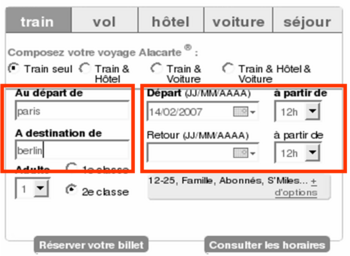

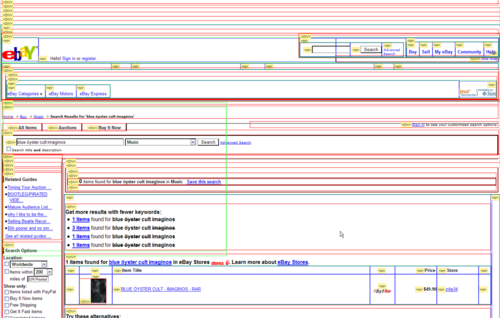

This process results in a model in which significant groups of components are annotated by a human operator. To be complete, however, the approach also requires a methodology for acquiring efficient models from pre-existing applications that may need assistance. The work described above can be combined with the analysis of real-world websites in order to compare models built by human designers with models obtained by automatic extraction (which relies on the same principle, except that there is no manual validation). The goal of this comparison is to identify generic heuristics that can then be used to improve the automatic synthesis of models for Assisting Conversational Agents.
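How the two kinds of models would be compared is left open. One simple possibility, offered purely as an assumption, is to match each human-validated group against the automatically computed groups using the Jaccard index (size of the intersection divided by size of the union), and to list the groups the algorithm missed or produced spuriously; such discrepancies are the kind of material from which heuristics could be derived. The `jaccard` and `confront` functions, the 0.5 overlap threshold and the example groups are hypothetical.

```python
def jaccard(a, b):
    """Jaccard index of two groups of component names."""
    a, b = set(a), set(b)
    return len(a & b) / len(a | b) if a | b else 1.0


def confront(human_groups, auto_groups, min_overlap=0.5):
    """Match each human-validated group to its best automatic counterpart.

    Returns (matches, missed, spurious):
      matches  -- (human group, automatic group, Jaccard score) triples
      missed   -- human groups with no sufficiently similar automatic group
      spurious -- automatic groups matched by no human group
    """
    matches, missed, used = [], [], set()
    for h in human_groups:
        best = max(auto_groups, key=lambda g: jaccard(h, g), default=None)
        if best is not None and jaccard(h, best) >= min_overlap:
            matches.append((tuple(sorted(h)), tuple(sorted(best)), jaccard(h, best)))
            used.add(tuple(sorted(best)))
        else:
            missed.append(tuple(sorted(h)))
    spurious = [tuple(sorted(g)) for g in auto_groups if tuple(sorted(g)) not in used]
    return matches, missed, spurious


human = [{"from_label", "from_field", "to_label", "to_field"}, {"date", "time"}]
auto = [{"from_label", "from_field", "to_label", "to_field"}, {"search", "help"}]
matches, missed, spurious = confront(human, auto)
print(missed)    # [('date', 'time')]
print(spurious)  # [('help', 'search')]
```

Missed groups point to perceptual cues the automatic extraction does not capture, while spurious groups suggest over-aggregation; both could guide the tuning of the extraction heuristics.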

Sample of the SNCF website used to validate the perceptual algorithm

Raw analysis of the eBay website exhibiting the complexity of the underlying HTML structure

References

For more information and online documentation, see the Daft project homepage.

- D. Leray, J.-P. Sansonnet, Assisting Dialogical Agents Modeled from Novice User's Perceptions, 11th International Conference on Knowledge-Based and Intelligent Information & Engineering Systems (KES'07), Vietri sul Mare, Italy, 12-14 September 2007.

- J.-P. Sansonnet, D. Leray, Kiwi: An environment for capturing the Perceptual Cues of an Application for an Assisting Conversational Agent, AISB'07 Workshop on Language, Speech and Gesture, Newcastle, UK, 2-4 April 2007.

- D. Leray, J.-P. Sansonnet, Ordinary user oriented model construction for Assisting Conversational Agents, International Workshop on Communication between Human and Artificial Agents (CHAA'06) at the IEEE-WIC-ACM Conference on Intelligent Agent Technology, Hong Kong, December 2006.

- D. Leray, J.-P. Sansonnet, Towards more Perceptual Models for Assisting Conversational Agents, International Conference on Intelligent Virtual Environments and Virtual Agents (IVEVA'06), Aguascalientes, Mexico, November 2006.

- J.-P. Sansonnet, D. Leray, J.-C. Martin, Architecture of a Framework for Generic Assisting Conversational Agents, short presentation at the Intelligent Virtual Agents Conference, LNAI 4133, pp. 145-156, Marina del Rey, CA, August 2006.