Multimodal 2D/3D interaction for editing and navigating urban spaces

Rami Ajaj Christian Jacquemin Frédéric Vernier


Abstract

The expansion of augmented and virtual reality into various application fields raises the question of multimodal interfaces for three-dimensional (3D) environments. Our research focuses on multimodal visual input and output, combining 3D views with 2D views offered to multiple users (see Figure 1). The setting we chose to study combines a 2D view browsed on a collaborative tabletop device with a 3D stereoscopic view projected on a wall screen. This setting is interesting because its views are complementary and because it supports concurrent 2D and 3D interactions on geometrical objects. Managing these concurrent interactions is a major issue in this type of combination. We have formalized the problem of sharing the different modes of interaction with a Petri net model. We implemented this model for 3D object positioning, allowing users to divide the degrees of freedom (DOF) of an object between them.

Scientific issues in concurrent 2D/3D interfaces

The use of multimodal interfaces is increasing in the field of virtual and augmented reality. Some works concentrate on multimodal input, combining gestural and spoken interaction to compensate for the lack of a 3D input device as usable as the mouse. Other works focus on multimodal output, combining visual and haptic feedback to improve the user's immersive experience. A multimodal system is a system that offers multiple communication channels. Rather than focusing on multimodal input or output separately, we study combined multimodal visual input and output. The goal of this combination is to support collaboration while addressing the lack of a mouse-like 3D input device. Our system offers two different presentations of the same virtual world. The two views are both complementary (some information perceived through one view is not perceived through the other) and redundant (the same information can be perceived through both views).

The design of our system revealed two interesting problems in 2D/3D multimodality:

- Since interaction occurs through both views synchronously, the problem of concurrency arises.

- The use of two different views raises the problems of the complementarity of these views and of brushing (modifications made through one view becoming visible in real time in all views).

Experimental Setting and Software Architecture

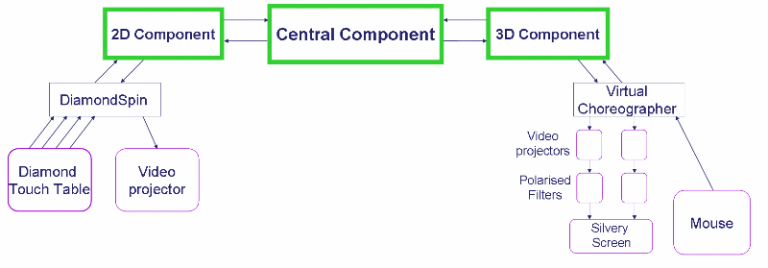

We combine a 3D immersive view projected on a wall screen with a 2D view projected on a collaborative tabletop device called DiamondTouch (developed by MERL). The 2D view can be seen as a bird's-eye view of the virtual scene that shows one or more cameras. Each camera represents the position and orientation of the point of view of a 3D view in the virtual world. Our solution to the concurrency problem is based on splitting the DOF for manipulating a virtual object between the 2D and 3D views. It relies on a software architecture with three components (see Figure 2):

- 2D Component: collaborative tabletop application built with the DiamondSpin library.

- 3D Component: 3D view application built with Virtual Choreographer.

- Central Component: holds all the geometric data, which keeps the 2D and 3D components independent of each other, and manages all communication between them.
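To make the role of the central component concrete, here is a minimal Python sketch of the three-component structure, assuming a simple in-process observer pattern. The class and method names (`CentralComponent`, `View`, `register_view`, `move`, `drag`) are hypothetical and do not correspond to the DiamondSpin or Virtual Choreographer APIs; the sketch only shows that views never exchange data directly, and that every change goes through the central component, which then pushes it to all registered views (brushing).

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class CentralComponent:
    """Hypothetical central component: the single owner of the geometric data."""
    positions: Dict[str, Vec3] = field(default_factory=dict)
    # Each view (2D tabletop, 3D wall) registers a callback to be told about changes.
    listeners: Dict[str, Callable[[str, Vec3], None]] = field(default_factory=dict)

    def register_view(self, name: str, on_update: Callable[[str, Vec3], None]) -> None:
        self.listeners[name] = on_update

    def move(self, obj: str, pos: Vec3) -> None:
        # Update the shared model, then broadcast to every registered view
        # (brushing: a change made in one view becomes visible in all views).
        self.positions[obj] = pos
        for notify in self.listeners.values():
            notify(obj, pos)


class View:
    """Hypothetical stand-in for the 2D (DiamondSpin) or 3D (Virtual Choreographer) side."""

    def __init__(self, name: str, central: CentralComponent) -> None:
        self.name = name
        self.central = central
        self.local: Dict[str, Vec3] = {}  # this view's copy of the scene
        central.register_view(name, self.on_update)

    def on_update(self, obj: str, pos: Vec3) -> None:
        self.local[obj] = pos  # refresh the rendered copy

    def drag(self, obj: str, pos: Vec3) -> None:
        # Views never talk to each other directly; every edit goes through the center.
        self.central.move(obj, pos)


central = CentralComponent()
table = View("2D", central)
wall = View("3D", central)
table.drag("house", (4.0, 0.0, 2.5))  # a tabletop user moves an object
```

Keeping the geometry in one place means either view can be replaced without the other noticing, which is the independence the central component is meant to guarantee. A real deployment would presumably replace the direct callbacks with messages between separate processes.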

Concurrency Management

Concurrency management is a major issue in this study; we address it through two modes of multimodal interaction:

- Exclusive mode: only one of the two representations (2D or 3D) of a given virtual object can be manipulated at any time. We manage concurrency at the object-selection level, so that selection feedback in one view is coupled with blocking feedback in the other.

- Cooperative mode: the different representations of the same virtual object can be manipulated simultaneously, in a complementary way. Interactions performed at the same time on the same object must act on different DOF in each view.

We now detail these two modes.

Exclusive mode

In this mode, a virtual object can be manipulated through only one of the 2D or 3D components at any given time. To implement this mode, we designed an independent Petri net (Figure 3) for each virtual object in the central component. The Petri net of a given object determines which component gets the right to manipulate it, and it also handles the case where another user requests to manipulate the same object through the other component.
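The exclusive-mode behaviour can be illustrated with a minimal place/transition Petri net in Python. The net structure below (places `free`, `held_2d`, `held_3d` and four select/release transitions) is a hypothetical reconstruction for illustration, not the net of Figure 3: a single token in `free` ensures that at most one component holds the object, and a refused transition corresponds to the blocked feedback shown in the other view.

```python
from typing import Dict, List, Tuple


class PetriNet:
    """Minimal place/transition net: a transition fires only if each input place holds a token."""

    def __init__(self, marking: Dict[str, int],
                 transitions: Dict[str, Tuple[List[str], List[str]]]) -> None:
        self.marking = dict(marking)
        self.transitions = transitions  # name -> (input places, output places)

    def enabled(self, name: str) -> bool:
        inputs, _ = self.transitions[name]
        return all(self.marking[p] > 0 for p in inputs)

    def fire(self, name: str) -> bool:
        if not self.enabled(name):
            return False  # request refused: the object is shown as blocked
        inputs, outputs = self.transitions[name]
        for p in inputs:
            self.marking[p] -= 1
        for p in outputs:
            self.marking[p] += 1
        return True


def exclusive_net() -> PetriNet:
    # Hypothetical places: the object is free, held by the 2D view, or held by the 3D view.
    return PetriNet(
        marking={"free": 1, "held_2d": 0, "held_3d": 0},
        transitions={
            "select_2d": (["free"], ["held_2d"]),
            "release_2d": (["held_2d"], ["free"]),
            "select_3d": (["free"], ["held_3d"]),
            "release_3d": (["held_3d"], ["free"]),
        },
    )


house = exclusive_net()                 # one independent net per virtual object
got_2d = house.fire("select_2d")        # a tabletop user selects the object
got_3d = house.fire("select_3d")        # refused while the 2D view holds it
house.fire("release_2d")
got_3d_later = house.fire("select_3d")  # the 3D user may now take it
```

Because each object has its own net, users can still work on different objects through different views at the same time; only access to the same object is serialized.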

Cooperative mode

In this mode, simultaneous interaction with an object through the 2D and 3D interfaces is possible. To implement this mode, we designed a Petri net (Figure 4) for each spatial attribute corresponding to one DOF of an object. These Petri nets indicate which component has permission to change a given attribute of a given object (e.g., one such Petri net now exists for the x-position of a virtual object). The per-attribute Petri nets are more complex than the per-object Petri net used in exclusive mode. This extra complexity comes from the need to block some types of interaction: an interaction through one component can be blocked while a given type of interaction is being performed through the other one (e.g., rotating an object through the 2D view is not allowed while the same object is being translated through the 3D view).
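Cooperative mode can be sketched in the same spirit, with one free/held-by-2D/held-by-3D triple of places per attribute. To express the blocking rule we use inhibitor arcs (a transition is disabled while an inhibitor place holds a token); this is an assumption, and Figure 4 may encode blocking differently. The attribute set (`x`, `y`, `z` and a single rotation `rot`) and the symmetric rule that translation and rotation block each other across views are hypothetical choices that generalize the rotation/translation example above.

```python
from typing import Dict, List, Tuple

TRANSLATION = ["x", "y", "z"]
ROTATION = ["rot"]  # hypothetical: a single rotation DOF
VIEWS = ["2d", "3d"]

Transition = Tuple[List[str], List[str], List[str]]  # (inputs, outputs, inhibitors)


class InhibitorNet:
    """Place/transition net with inhibitor arcs: a transition is enabled when every
    input place holds a token and every inhibitor place is empty."""

    def __init__(self, marking: Dict[str, int], transitions: Dict[str, Transition]) -> None:
        self.marking = dict(marking)
        self.transitions = transitions

    def fire(self, name: str) -> bool:
        inputs, outputs, inhibitors = self.transitions[name]
        if any(self.marking[p] == 0 for p in inputs):
            return False  # attribute already held by some view
        if any(self.marking[p] > 0 for p in inhibitors):
            return False  # blocked by a conflicting interaction in the other view
        for p in inputs:
            self.marking[p] -= 1
        for p in outputs:
            self.marking[p] += 1
        return True


def other(view: str) -> str:
    return "3d" if view == "2d" else "2d"


def cooperative_net() -> InhibitorNet:
    marking: Dict[str, int] = {}
    transitions: Dict[str, Transition] = {}
    for attr in TRANSLATION + ROTATION:
        marking[f"{attr}_free"] = 1  # one token per attribute (DOF)
        for v in VIEWS:
            marking[f"{attr}_{v}"] = 0
    for attr in TRANSLATION + ROTATION:
        # Hypothetical rule: an attribute of one kind cannot be grabbed while the
        # other view holds any attribute of the opposite kind.
        opposite = ROTATION if attr in TRANSLATION else TRANSLATION
        for v in VIEWS:
            blockers = [f"{a}_{other(v)}" for a in opposite]
            transitions[f"grab_{attr}_{v}"] = ([f"{attr}_free"], [f"{attr}_{v}"], blockers)
            transitions[f"release_{attr}_{v}"] = ([f"{attr}_{v}"], [f"{attr}_free"], [])
    return InhibitorNet(marking, transitions)


net = cooperative_net()
x_by_3d = net.fire("grab_x_3d")            # 3D user starts translating along x
z_by_2d = net.fire("grab_z_2d")            # 2D user takes a different DOF at the same time
x_by_2d = net.fire("grab_x_2d")            # refused: x is already held by the 3D view
rot_by_2d = net.fire("grab_rot_2d")        # refused: 2D rotation blocked while 3D translates
net.fire("release_x_3d")
rot_by_2d_later = net.fire("grab_rot_2d")  # allowed once the 3D translation ends
```

Because each attribute has its own token, two users can manipulate different DOF of the same object at once, while the inhibitor arcs rule out the conflicting combinations.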

Discussion

The application domain we used to guide the design of our system is architecture and urbanism, where the use of virtual and augmented reality is increasingly studied. This application field inspired us mostly because of the different modalities used by the multiple actors in such projects. Architects are used to working on 2D plans and must be able to imagine the overall 3D form of the project easily. Craftsmen are also used to working with 2D plans. Customers, on the other hand, wish to visualise a realistic 3D view of the project in order to make decisions. Moreover, manipulation through both views is particularly relevant because all these actors need to work together. The architectural design field relies on many different representations, which encourages us to improve our 2D presentation of 3D virtual objects. It also leads us to explore new collaborative interaction techniques for object deformation.

Conclusion and Perspectives

We have designed a software architecture based on three components that allows synchronous interactions through a 2D collaborative view and a 3D stereoscopic view. We are now focusing on the design of new combined interaction techniques that use the same software architecture, but with new tasks and various 3D input devices. This future work has two goals. The first is to draw up rules that a designer of a combined 2D/3D interaction technique should follow. The second is to evaluate and compare several interaction techniques that combine 2D and 3D interactions in the domain of architecture and urbanism.
